As your application grows, your database needs to keep up. PostgreSQL is incredibly powerful, but even the best database systems need thoughtful scaling strategies. In this post, I’ll walk you through the two main approaches to scaling PostgreSQL: vertical (scaling up) and horizontal (scaling out).

Table of Contents

- Understanding Database Growth Challenges

- Vertical Scaling: The Straightforward Approach

- When to Scale Vertically

- Practical Steps for Vertical Scaling

- Limitations of Vertical Scaling

- Horizontal Scaling: Distributing the Load

- Read Replicas: Offloading Select Queries

- Sharding: Dividing Your Data

- Connection Pooling: Managing Resources Efficiently

- Real-world Scaling Scenarios

- Monitoring and Knowing When to Scale

- Conclusion: Creating Your Scaling Strategy

Understanding Database Growth Challenges

Imagine you’ve opened a small neighborhood library. At first, one librarian can handle everything – checking books in and out, reshelving, and helping visitors. But as word spreads and more people visit, your lone librarian becomes overwhelmed. You face three main problems:

- More visitors than can be served (connection limits)

- Not enough shelf space (storage capacity)

- Taking too long to find books (query performance)

Your PostgreSQL database faces the same challenges as it grows. Let’s look at how to address them.

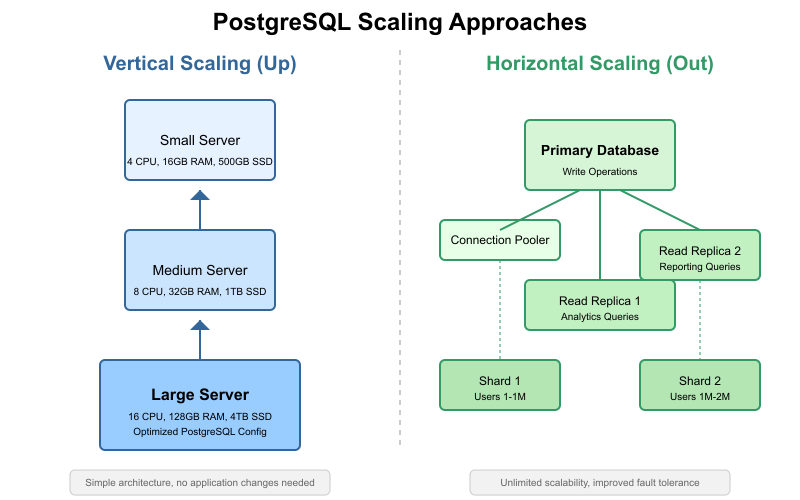

Vertical Scaling: The Straightforward Approach

Vertical scaling is like upgrading from a small building to a larger one with more shelves and hiring more staff. In database terms, it means increasing the resources on your existing database server.

When to Scale Vertically

Vertical scaling should generally be your first approach when:

- Your database is smaller than 1TB

- You’re experiencing resource constraints (CPU, memory, disk I/O)

- You want to maintain a simple architecture

Practical Steps for Vertical Scaling

Here’s how you might vertically scale a PostgreSQL database:

1. Upgrade Hardware Resources

Imagine your database server is showing signs of stress with CPU consistently above 70% utilization. You could upgrade from:

Current: 4 CPUs, 16GB RAM, 500GB SSD

Upgraded: 8 CPUs, 32GB RAM, 1TB SSD

2. Optimize PostgreSQL Configuration

PostgreSQL’s default settings are conservative. On a larger server, you’ll want to adjust parameters like:

# Before (default settings)

shared_buffers = 128MB

work_mem = 4MB

maintenance_work_mem = 64MB

effective_cache_size = 4GB

# After (optimized for 32GB RAM machine)

shared_buffers = 8GB

work_mem = 20MB

maintenance_work_mem = 1GB

effective_cache_size = 24GB

Think of shared_buffers as your librarian’s desk space – more space means more books can be processed at once without walking back to the shelves.

3. Tune Your Storage System

Storage often becomes a bottleneck before CPU or memory. Consider:

- Moving to faster SSDs or NVMe drives

- Using RAID configurations for better performance

- Implementing proper I/O scheduling

Limitations of Vertical Scaling

Like building a taller library, vertical scaling eventually hits limits:

- There’s a ceiling to how much hardware you can add to a single server

- Cost increases exponentially as you move to high-end hardware

- You still have a single point of failure

As our wise senior DBA would say: “You can’t solve every database problem by throwing hardware at it.”

Horizontal Scaling: Distributing the Load

Horizontal scaling is like opening multiple library branches across town instead of just building one massive library. You distribute the load across multiple servers.

Read Replicas: Offloading Select Queries

Let’s say 80% of your database workload is read operations (SELECT queries). You can create read replicas – copies of your database that stay in sync with the primary but only serve read operations.

How to set up a basic read replica:

- Configure streaming replication in

postgresql.confon your primary:

wal_level = replica

max_wal_senders = 10

max_replication_slots = 10

- Set up authentication in

pg_hba.conf:

host replication replicator 192.168.1.100/32 md5

- Create a base backup for the replica:

pg_basebackup -h primary_host -D /var/lib/postgresql/data -U replicator -P -v

- Configure the replica to connect to the primary in

recovery.conf:

primary_conninfo = 'host=primary_host port=5432 user=replicator password=secret'

Now your application can send read queries to replicas and write queries to the primary, like having reference librarians handling inquiries while the main librarian processes returns and checkouts.

Sharding: Dividing Your Data

Sharding divides your data across multiple independent database servers based on some logical division.

Imagine our library has grown so large we split our collection:

- Branch 1: Books with titles A-M

- Branch 2: Books with titles N-Z

Similarly, you might shard your user database by user_id ranges:

- Shard 1: user_id 1-1,000,000

- Shard 2: user_id 1,000,001-2,000,000

Simple sharding approach with foreign data wrappers:

- Create separate PostgreSQL instances for each shard

- On your application server or a coordinator database, create foreign tables:

CREATE EXTENSION postgres_fdw;

CREATE SERVER shard1 FOREIGN DATA WRAPPER postgres_fdw

OPTIONS (host 'shard1.example.com', port '5432', dbname 'userdb');

CREATE USER MAPPING FOR postgres SERVER shard1

OPTIONS (user 'postgres', password 'secret');

CREATE FOREIGN TABLE users_shard1 (

user_id integer,

username text,

email text

)

SERVER shard1 OPTIONS (table_name 'users');

- Create a view that unifies access:

CREATE VIEW all_users AS

SELECT * FROM users_shard1 WHERE user_id BETWEEN 1 AND 1000000

UNION ALL

SELECT * FROM users_shard2 WHERE user_id BETWEEN 1000001 AND 2000000;

Sharding requires careful planning but provides nearly unlimited horizontal scaling potential.

Connection Pooling: Managing Resources Efficiently

Each PostgreSQL connection consumes memory and OS resources. Connection pooling is like having an organized reception desk at your library that directs visitors efficiently.

Setting up PgBouncer, a popular connection pooler:

- Install PgBouncer:

sudo apt-get install pgbouncer

- Configure

/etc/pgbouncer/pgbouncer.ini:

[databases]

mydb = host=127.0.0.1 port=5432 dbname=mydb

[pgbouncer]

listen_addr = *

listen_port = 6432

auth_type = md5

auth_file = /etc/pgbouncer/userlist.txt

pool_mode = transaction

max_client_conn = 1000

default_pool_size = 20- Update your application to connect to PgBouncer instead of directly to PostgreSQL.

Connection pooling can help you support thousands of application connections with only dozens of actual database connections.

Real-world Scaling Scenarios

Scenario 1: E-commerce Platform

- Start with vertical scaling for the first few thousand users

- Add read replicas when product browsing increases

- Use connection pooling when checkout processes spike during sales

- Consider sharding by product category when inventory exceeds 10 million items

Scenario 2: SaaS Application

- Begin with a powerful single server

- Implement read replicas for reporting features

- Separate databases for different components (auth, billing, content)

- Shard when customer data grows beyond 500GB

Monitoring and Knowing When to Scale

Before scaling, understand what’s actually limiting your performance:

Key Metrics to Monitor:

- CPU usage consistently above 70%

- Disk I/O wait times increasing

- Free memory regularly dropping below 20%

- Query execution times increasing

- Connection count approaching max_connections

Use tools like:

pg_stat_statementsto identify slow queries- Prometheus and Grafana for visualizing trends

- Regular EXPLAIN ANALYZE on critical queries

As our experienced DBA would say: “Measure twice, scale once.”

Conclusion: Creating Your Scaling Strategy

The best PostgreSQL scaling strategy combines both approaches:

- Start with vertical scaling for simplicity

- Add read replicas for read-heavy workloads

- Implement connection pooling early

- Consider sharding only when absolutely necessary

Remember that scaling isn’t just about hardware—query optimization, proper indexing, and regular maintenance are equally important. Often, a well-tuned 4-core server will outperform a poorly configured 32-core monster.

Most importantly, plan your scaling strategy before you desperately need it. The best time to think about scaling is when your system is performing well, not when it’s already under stress.

Happy scaling!