In today’s data-driven world, ensuring your database is always available and protected against data loss is crucial. Whether you’re running a small application or managing enterprise systems, understanding PostgreSQL replication and high availability can make the difference between smooth operations and costly downtime.

Introduction: Why Replication Matters

Imagine you’re running an online store. Every order, every customer, and every product detail lives in your PostgreSQL database. Now imagine your database server fails during Black Friday sales. Without proper planning, your business comes to a grinding halt, customers get frustrated, and you lose revenue with every passing minute.

Database replication and high availability strategies are like insurance policies for your data. They ensure that when (not if) something goes wrong with your primary database, your applications keep running and your data remains safe.

Understanding PostgreSQL Replication

What Is Database Replication?

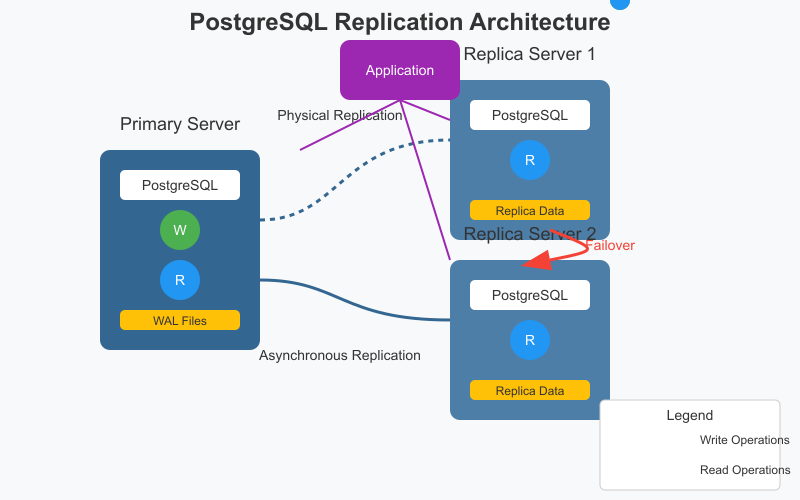

At its core, database replication means maintaining identical copies of your database on multiple servers. These copies are kept in sync, typically with changes flowing from one designated primary server to one or more replica servers.

Think of it like taking constant backups, except these “backups” are live, up-to-date, and ready to use at a moment’s notice.

Primary vs. Replica: The Master-Apprentice Relationship

In a typical PostgreSQL replication setup:

- Primary Server: This is the “master” that handles all write operations (INSERT, UPDATE, DELETE). Think of it as the authoritative source of truth.

- Replica Servers: These are the “apprentices” that maintain copies of the primary’s data. They can handle read operations, spreading the load and improving performance.

It’s like a senior chef (primary) who creates recipes while apprentice chefs (replicas) follow those recipes precisely, allowing the restaurant to serve more customers simultaneously.
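On the application side, this split often shows up as a small router that sends writes to the primary and spreads reads across replicas. The Python sketch below is a toy. The QueryRouter class and the host names are hypothetical, and classifying a statement by its first keyword is a deliberate simplification. A real router (or a proxy such as Pgpool-II) also has to handle transactions, SELECT ... FOR UPDATE, and functions or CTEs that write.

```python
from itertools import cycle


class QueryRouter:
    """Toy read/write splitter: writes go to the primary, reads rotate over replicas."""

    # Statements that modify data or schema and therefore must run on the primary.
    WRITE_KEYWORDS = {"INSERT", "UPDATE", "DELETE", "CREATE", "ALTER", "DROP", "TRUNCATE"}

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        # Fall back to the primary if no replicas are configured.
        self._replicas = cycle(replicas or [primary])

    def route(self, sql: str) -> str:
        words = sql.strip().split(None, 1)
        first_word = words[0].upper() if words else ""
        if first_word in self.WRITE_KEYWORDS:
            return self.primary
        return next(self._replicas)


router = QueryRouter("primary.db.internal", ["replica1.db.internal", "replica2.db.internal"])
print(router.route("INSERT INTO orders (id) VALUES (1)"))  # primary.db.internal
print(router.route("SELECT * FROM products"))              # replica1.db.internal
print(router.route("select count(*) from customers"))      # replica2.db.internal
```

Round-robin is the simplest policy; a production router would also skip replicas that are down or lagging too far behind.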

Physical vs. Logical Replication

PostgreSQL offers two fundamentally different types of replication:

Physical Replication

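Physical replication ships the primary’s WAL (Write-Ahead Log) records, which describe changes to data pages at the byte level, so each replica maintains a block-for-block copy of the primary’s data files. Streaming replication, where WAL is sent continuously over a network connection, is its most common form. It’s like photocopying pages from a book: exact duplicates with no interpretation needed.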

Advantages:

- Simple to set up

- Replicates the entire database cluster

- Lower overhead

Disadvantages:

- All-or-nothing approach (can’t replicate just specific tables)

- Replica servers are read-only

- Primary and replicas must run the same PostgreSQL major version on the same CPU architecture

Logical Replication

Logical replication works at the row level: the primary decodes its WAL into logical changes (rows inserted, updated, or deleted in specific tables) and sends them to subscribers, which apply them to their own tables. It’s like someone reading the book and then writing down the story in their own handwriting: the content is the same, but the implementation can differ.

Advantages:

- Can replicate only selected tables instead of the whole cluster

- Can replicate between different PostgreSQL versions

- Subscribers remain ordinary writable servers, so they can also hold their own tables (writing to replicated tables is possible but risks conflicts)

Disadvantages:

- More complex setup

- Higher overhead

- Doesn’t replicate schema changes (DDL) or sequence values; these must be applied to subscribers separately
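In SQL terms, logical replication is configured with a publication on the source server and a subscription on the target, and the source needs wal_level = logical. The Python helper below only assembles those statements so the moving parts are visible together. The publication, subscription, and table names and the connection string are hypothetical, tables are assumed to be unqualified names, and the quoting helpers are simplified versions of PostgreSQL's quote_ident and quote_literal (they always quote). The tables must already exist on the subscriber, and the connecting role needs the REPLICATION attribute plus SELECT on the published tables.

```python
def quote_ident(name: str) -> str:
    """Double-quote an identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Single-quote a string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def logical_replication_sql(publication: str, subscription: str,
                            tables: list[str], conninfo: str) -> dict[str, list[str]]:
    """Return the statements to run on each side of a logical replication setup."""
    table_list = ", ".join(quote_ident(t) for t in tables)
    return {
        # Run on the publisher (which must have wal_level = logical).
        "publisher": [
            f"CREATE PUBLICATION {quote_ident(publication)} FOR TABLE {table_list};",
        ],
        # Run on the subscriber after creating the same tables there,
        # because logical replication does not copy the schema.
        "subscriber": [
            f"CREATE SUBSCRIPTION {quote_ident(subscription)} "
            f"CONNECTION {quote_literal(conninfo)} "
            f"PUBLICATION {quote_ident(publication)};",
        ],
    }


statements = logical_replication_sql(
    "shop_pub", "shop_sub", ["orders", "customers"],
    "host=primary_server_ip dbname=shop user=replicator",
)
for side, sqls in statements.items():
    for sql in sqls:
        print(f"-- on the {side}\n{sql}")
```

By default, CREATE SUBSCRIPTION first copies the existing rows of each published table and then streams subsequent changes.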

Synchronous vs. Asynchronous: The Time Factor

Asynchronous Replication

With asynchronous replication (the default), the primary server doesn’t wait for replicas to confirm they’ve received a change before reporting a successful commit to the client.

It’s like sending a text message—you know it’s sent, but you don’t know immediately if it was received.

Pros: Fast commits, because the primary doesn’t wait for replicas.

Cons: Recently committed transactions can be lost if the primary fails before they reach the replicas.

Synchronous Replication

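With synchronous replication, the primary waits until one or more standbys listed in synchronous_standby_names confirm they have received the transaction’s WAL and written it to disk before reporting the commit as complete. (With synchronous_commit = remote_apply, it also waits until the change has been applied and is visible to queries on the standby.)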

This is like sending a certified letter—you get confirmation when it’s delivered, but the sending process takes longer.

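Pros: A transaction acknowledged to the client survives a primary failure, because a synchronous replica already has it.

Cons: Higher latency for write operations, and writes stall if no synchronous replica is available.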
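The difference in what can be lost is easy to see in a toy model. The Python sketch below is not how PostgreSQL is implemented. It models a primary that has committed a list of transactions and a replica that has received a prefix of them, then reports which transactions a client was told succeeded but that the promoted replica would not have.

```python
def lost_after_failover(committed: list[str], replica_received: int,
                        synchronous: bool) -> list[str]:
    """Return acknowledged transactions missing from the replica after a failover.

    committed: transactions committed on the primary, in commit order.
    replica_received: how many of them the replica had received when the primary died.
    """
    if synchronous:
        # A commit is acknowledged only after the replica confirms it, so the
        # acknowledged set is exactly what the replica already has. Later
        # transactions were still waiting and were never reported as successful.
        acknowledged = committed[:replica_received]
    else:
        # Asynchronous: every commit was acknowledged immediately.
        acknowledged = committed
    return acknowledged[replica_received:]


txs = ["t1", "t2", "t3", "t4"]
print(lost_after_failover(txs, 2, synchronous=False))  # ['t3', 't4']
print(lost_after_failover(txs, 2, synchronous=True))   # []
```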

Setting Up Basic Replication

Let’s walk through setting up basic streaming replication in PostgreSQL. I’ll keep it simple, focusing on the core concepts.

Prerequisites

- Two PostgreSQL servers running the same major version on the same CPU architecture

- Network connectivity between them

- Adequate disk space on both

Step 1: Configure the Primary Server

Edit the postgresql.conf file on your primary server:

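# Enable replication
listen_addresses = '*' # Accept connections from other hosts (the default, 'localhost', blocks the replica)
wal_level = replica # The default since PostgreSQL 10
max_wal_senders = 10 # Maximum number of concurrent connections from replica servers
wal_keep_segments = 64 # WAL segments kept for replicas; removed in PostgreSQL 13, use wal_keep_size there

# Optional and unrelated to replication itself: the default is 'on'. Setting it to 'off'
# speeds up commits but can lose the most recent transactions if the server crashes.
# synchronous_commit = off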
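Because wal_keep_segments was removed in PostgreSQL 13, the replacement setting is expressed as a size: wal_keep_size = wal_keep_segments × WAL segment size, where the segment size is 16 MB unless the cluster was initialized with a different --wal-segsize. A small Python sketch that picks the right parameter for a given major version (the function name and defaults are illustrative):

```python
DEFAULT_WAL_SEGMENT_MB = 16  # default WAL segment size; configurable at initdb time


def wal_retention_setting(major_version: int, segments: int = 64,
                          segment_mb: int = DEFAULT_WAL_SEGMENT_MB) -> str:
    """Return the postgresql.conf line that keeps `segments` WAL segments for replicas."""
    if major_version >= 13:
        # PostgreSQL 13 replaced wal_keep_segments with the size-based wal_keep_size.
        return f"wal_keep_size = {segments * segment_mb}MB"
    return f"wal_keep_segments = {segments}"


print(wal_retention_setting(16))  # wal_keep_size = 1024MB
print(wal_retention_setting(12))  # wal_keep_segments = 64
```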

Next, edit pg_hba.conf to allow the replica to connect:

# Allow replication connections from the replica server
host replication replicator 192.168.1.2/32 md5

(Replace 192.168.1.2 with your replica’s IP address)
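If you manage several replicas, generating these entries avoids typos in addresses. The sketch below uses Python's standard ipaddress module to validate the replica address and normalize it to CIDR form. The helper name is hypothetical, and scram-sha-256 is shown as the default method because it is the stronger option on PostgreSQL 10 and later. It requires the role's password to be stored as SCRAM (password_encryption = scram-sha-256, the default since PostgreSQL 14); md5, as in the example above, still works.

```python
import ipaddress


def replication_hba_line(replica_ip: str, user: str = "replicator",
                         method: str = "scram-sha-256") -> str:
    """Build a pg_hba.conf entry allowing one replica host (or subnet) to replicate."""
    # ip_network raises ValueError for invalid input; a bare IPv4 address becomes /32.
    network = ipaddress.ip_network(replica_ip, strict=True)
    return f"host replication {user} {network.with_prefixlen} {method}"


print(replication_hba_line("192.168.1.2"))
# host replication replicator 192.168.1.2/32 scram-sha-256
```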

Create a replication user:

CREATE ROLE replicator WITH LOGIN REPLICATION PASSWORD 'strongpassword';

Restart PostgreSQL to apply these changes.

Step 2: Create a Base Backup for the Replica

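On the replica server, stop PostgreSQL and empty its data directory. Then, on the replica and as the postgres operating system user (so the files get the right ownership), run:

pg_basebackup -h primary_server_ip -D /var/lib/postgresql/data -U replicator -P -v

The data directory path varies between installations. Adding -R makes pg_basebackup write the replica configuration for you (standby.signal plus primary_conninfo on PostgreSQL 12+, recovery.conf on older versions), which covers most of Step 3.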

Step 3: Configure the Replica Server

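How you mark the server as a replica depends on the version: PostgreSQL 11 and earlier use a recovery.conf file in the data directory (containing standby_mode = 'on' and primary_conninfo), while PostgreSQL 12 and newer use an empty standby.signal file plus settings in postgresql.conf.

For PostgreSQL 12 and newer:

- Create an empty file called standby.signal in the data directory.

- Add these settings to postgresql.conf:

primary_conninfo = 'host=primary_server_ip port=5432 user=replicator password=strongpassword'
hot_standby = on # Allows read-only queries on the replica (already the default since PostgreSQL 10)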
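The two file changes in this step can be scripted. Below is a minimal Python sketch, assuming PostgreSQL 12 or newer and a data directory that already holds the base backup; the function name is hypothetical, and it should run as the postgres operating system user. In practice pg_basebackup -R does the equivalent work (writing to postgresql.auto.conf rather than postgresql.conf).

```python
import tempfile
from pathlib import Path


def configure_standby(data_dir: str, conninfo: str) -> None:
    """Mark a PostgreSQL 12+ data directory as a standby and point it at the primary."""
    data = Path(data_dir)
    # An empty standby.signal file makes the server start in standby mode.
    (data / "standby.signal").touch()
    # postgresql.conf strings are single-quoted; embedded quotes are doubled.
    escaped = conninfo.replace("'", "''")
    # Append the settings; if a parameter appears more than once, the last entry wins.
    with open(data / "postgresql.conf", "a", encoding="utf-8") as conf:
        conf.write(f"\nprimary_conninfo = '{escaped}'\n")
        conf.write("hot_standby = on\n")


# Demonstration on a throwaway directory rather than a real data directory.
with tempfile.TemporaryDirectory() as scratch:
    configure_standby(scratch, "host=primary_server_ip port=5432 user=replicator")
    print(sorted(p.name for p in Path(scratch).iterdir()))
    # ['postgresql.conf', 'standby.signal']
```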

Start PostgreSQL on the replica.

Step 4: Verify Replication

On the primary server, check replication status:

SELECT client_addr, state, sent_lsn, write_lsn, flush_lsn, replay_lsn
FROM pg_stat_replication;

You should see your replica server listed with “streaming” state.
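The *_lsn columns are WAL positions printed as two hexadecimal numbers, high/low, which together form a 64-bit byte offset into the WAL. Subtracting replay_lsn from sent_lsn gives the number of bytes the replica has received but not yet applied; inside PostgreSQL, pg_wal_lsn_diff(sent_lsn, replay_lsn) computes the same thing. A Python sketch of the arithmetic (the sample LSN values are made up):

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '0/3000060' to a 64-bit byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replay_lag_bytes(sent_lsn: str, replay_lsn: str) -> int:
    """Bytes of WAL sent to the replica but not yet replayed there."""
    return lsn_to_int(sent_lsn) - lsn_to_int(replay_lsn)


print(lsn_to_int("1/0"))                           # 4294967296
print(replay_lag_bytes("0/3000060", "0/3000000"))  # 96
```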

On the replica, verify it’s in recovery mode:

SELECT pg_is_in_recovery();

This should return true if the replica is properly set up.

High Availability Concepts

High availability is about keeping your database service running even when individual components fail. It goes beyond just replication.

Key High Availability Metrics

Recovery Time Objective (RTO)

RTO is the maximum acceptable time your database can be down. For example, if your RTO is 5 minutes, your system should be operational within 5 minutes after a failure.

Recovery Point Objective (RPO)

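RPO represents the maximum amount of data, usually expressed as a window of time, that you can afford to lose. With synchronous replication, your RPO can be zero for transactions confirmed by a synchronous replica. With asynchronous replication, a failover loses whatever the replica had not yet received when the primary failed, so your effective RPO is roughly the replication lag at that moment.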
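A worked example ties both metrics to numbers. Suppose detecting a failure takes 30 seconds, promotion 10 seconds, and repointing applications 45 seconds: the outage lasts 85 seconds, inside the 5-minute RTO from the example above. If the asynchronous replica was 2 seconds behind when the primary died, roughly the last 2 seconds of commits are gone, which violates an RPO of zero. The Python sketch below encodes that check; the FailoverDrill class is hypothetical and all timings are inputs you would take from your own failover tests and lag monitoring.

```python
from dataclasses import dataclass


@dataclass
class FailoverDrill:
    detection_s: float       # time to notice the primary is down
    promotion_s: float       # time to promote a replica
    reconnect_s: float       # time for applications to reach the new primary
    lag_at_failure_s: float  # replication lag (seconds of commits) when the primary failed

    def downtime_s(self) -> float:
        return self.detection_s + self.promotion_s + self.reconnect_s

    def meets(self, rto_s: float, rpo_s: float) -> dict[str, bool]:
        return {
            "rto": self.downtime_s() <= rto_s,
            # With asynchronous replication, data loss is roughly the lag at failure.
            "rpo": self.lag_at_failure_s <= rpo_s,
        }


drill = FailoverDrill(detection_s=30, promotion_s=10, reconnect_s=45, lag_at_failure_s=2)
print(drill.downtime_s())               # 85
print(drill.meets(rto_s=300, rpo_s=0))  # {'rto': True, 'rpo': False}
```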

Failover: The Critical Moment

Failover is the process of promoting a replica to become the new primary when the original primary fails. It can be:

- Manual failover: An administrator runs commands to promote a replica.

- Automatic failover: Software detects the primary failure and promotes a replica automatically.

The failover process typically involves:

- Detecting the primary server failure

- Selecting the most suitable replica to promote

- Promoting that replica to primary status

- Reconfiguring the application to use the new primary

- Reestablishing replication with other replicas
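Choosing the replica to promote usually means picking the healthy replica that has replayed the most WAL, so the least data is lost. Here is a simplified Python sketch of that rule. The replica names and LSN values are hypothetical, and real tools such as Patroni and repmgr weigh additional factors (configured priorities, tags that exclude a node from failover, a maximum allowed lag).

```python
from typing import NamedTuple, Optional


class ReplicaStatus(NamedTuple):
    name: str
    healthy: bool
    replay_lsn: str  # e.g. the result of pg_last_wal_replay_lsn() on that replica


def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '0/3000060' to a 64-bit byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def choose_promotion_candidate(replicas: list[ReplicaStatus]) -> Optional[ReplicaStatus]:
    """Pick the healthy replica that is furthest ahead in WAL replay, or None."""
    healthy = [r for r in replicas if r.healthy]
    if not healthy:
        return None
    return max(healthy, key=lambda r: lsn_to_int(r.replay_lsn))


replicas = [
    ReplicaStatus("replica-a", healthy=True, replay_lsn="0/5000100"),
    ReplicaStatus("replica-b", healthy=True, replay_lsn="0/50000F0"),
    ReplicaStatus("replica-c", healthy=False, replay_lsn="0/6000000"),
]
print(choose_promotion_candidate(replicas).name)  # replica-a
```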

Common High Availability Solutions

Built-in PostgreSQL Solutions

Streaming Replication with Manual Failover

This is the simplest solution, but it requires manual intervention during failures.

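To promote a replica to primary, connect to the replica and run:

SELECT pg_promote(); -- PostgreSQL 12+

On earlier versions, run this on the replica as the postgres operating system user instead:

pg_ctl promote -D /var/lib/postgresql/data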

Third-party Tools

Several tools can manage automatic failover:

Patroni

Patroni is a template for high availability PostgreSQL clusters that relies on a distributed configuration store such as ZooKeeper, etcd, or Consul to decide which node is the primary.

# Sample simplified Patroni configuration
# (a working setup also needs connect_address, authentication, and bootstrap settings)
scope: postgres-cluster
name: node1
restapi:
  listen: 0.0.0.0:8008
postgresql:
  listen: 0.0.0.0:5432
  data_dir: /var/lib/postgresql/data
etcd:
  host: 127.0.0.1:2379

pgpool-II

Pgpool-II sits between applications and PostgreSQL, providing connection pooling, load balancing, automatic failover, and a watchdog that keeps Pgpool-II itself highly available.

repmgr

repmgr simplifies the setup and management of replication and failover; its repmgrd daemon can monitor the cluster and perform automatic failover.

Cloud-based Solutions

Most cloud providers offer managed PostgreSQL with built-in high availability:

- AWS RDS for PostgreSQL with Multi-AZ deployments

- Azure Database for PostgreSQL with zone-redundant high availability

- Google Cloud SQL for PostgreSQL with high availability configuration

These services handle replication, monitoring, and failover for you, reducing operational overhead.

Best Practices for PostgreSQL Replication

Performance Considerations

- Server Locations: Place replicas geographically close to their clients to reduce latency for read operations.

- Hardware Balance: Ensure replica servers have similar specifications to the primary to avoid performance degradation during failover.

- Network Bandwidth: Ensure sufficient bandwidth between primary and replicas, especially for write-heavy workloads.
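For sizing that bandwidth, a rough rule is that the primary sends its full WAL stream to every physical replica, so the outbound bandwidth needed is about WAL generation rate × number of replicas, plus headroom for bursts and catch-up after an outage. For example, 20 MB/s of WAL to three replicas is 60 MB/s, or 480 Mbit/s. You can estimate the WAL rate by sampling pg_current_wal_lsn() on the primary twice and dividing the byte difference by the elapsed time. A Python sketch (the 1.5× headroom factor is an arbitrary assumption, not a PostgreSQL recommendation):

```python
def required_bandwidth_mbit(wal_mb_per_s: float, replicas: int, headroom: float = 1.5) -> float:
    """Approximate outbound bandwidth (Mbit/s) the primary needs for streaming replication."""
    # Each physical replica receives the full WAL stream; 1 byte = 8 bits.
    return wal_mb_per_s * replicas * 8 * headroom


print(required_bandwidth_mbit(20, 3, headroom=1.0))  # 480.0
print(required_bandwidth_mbit(20, 3))                # 720.0
```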

Security Recommendations

- Encryption: Use SSL for replication connections to protect data in transit.

- Network Isolation: Place replication traffic on a private, isolated network when possible.

- Strong Authentication: Use strong passwords or certificate authentication for replication connections.
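On the replica side, encryption is requested through primary_conninfo: sslmode=verify-full both encrypts the connection and verifies the primary's certificate and host name, and it pairs with a hostssl line (instead of host) in the primary's pg_hba.conf. Building that string by hand is error-prone, because libpq requires empty values or values containing spaces to be single-quoted, with single quotes and backslashes escaped by a backslash. A small Python sketch of those quoting rules (the function name, host name, and certificate path are hypothetical):

```python
def build_conninfo(**params: str) -> str:
    """Assemble a libpq keyword=value connection string, quoting values where needed."""
    parts = []
    for key, value in params.items():
        # Backslashes and single quotes inside a value must be backslash-escaped.
        escaped = value.replace("\\", "\\\\").replace("'", "\\'")
        # Quote empty values and values with whitespace; quoting values that
        # contain escapes as well is harmless and easier to read.
        if value == "" or any(c.isspace() or c in "'\\" for c in value):
            escaped = f"'{escaped}'"
        parts.append(f"{key}={escaped}")
    return " ".join(parts)


conninfo = build_conninfo(
    host="primary.db.internal", port="5432", user="replicator",
    sslmode="verify-full", sslrootcert="/etc/postgresql/root.crt",
)
print(conninfo)
# host=primary.db.internal port=5432 user=replicator sslmode=verify-full sslrootcert=/etc/postgresql/root.crt
```

The result still has to be wrapped in single quotes inside primary_conninfo, which has its own escaping rules, so keeping the password in a ~/.pgpass file for the postgres user avoids putting it in either place.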

Maintenance Tips

- Regular Testing: Test your failover process regularly to ensure it works when needed.

- Monitoring: Set up alerts for replication lag, which indicates replicas falling behind the primary.

- Backup Beyond Replication: Replication is not a substitute for backups. A mistaken DELETE or DROP TABLE reaches every replica within moments, so maintain regular backups as well.
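For lag alerts, firing on a single sample tends to be noisy, because lag spikes briefly during bulk writes. One common pattern, sketched here in Python with hypothetical thresholds and a hypothetical LagAlert class, is to fire only when lag stays above the limit for several consecutive checks:

```python
from collections import deque


class LagAlert:
    """Fire when replication lag exceeds a threshold for N consecutive samples."""

    def __init__(self, threshold_s: float, consecutive: int) -> None:
        self.threshold_s = threshold_s
        # Keep only the most recent `consecutive` over/under-threshold results.
        self.recent = deque(maxlen=consecutive)

    def observe(self, lag_s: float) -> bool:
        """Record one lag sample; return True if the alert condition holds."""
        self.recent.append(lag_s > self.threshold_s)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


alert = LagAlert(threshold_s=30, consecutive=3)
print([alert.observe(s) for s in [5, 45, 50, 10, 40, 60, 90]])
# [False, False, False, False, False, False, True]
```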

Troubleshooting Common Issues

Replication Lag

Replication lag occurs when replicas fall behind in applying changes from the primary. Common causes include:

- Insufficient hardware on replicas

- Network bandwidth limitations

- Heavy write load on the primary

- Long-running queries on replicas

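Solution: Monitor lag. On the replica, run:

SELECT now() - pg_last_xact_replay_timestamp() AS replication_lag;

On the primary (PostgreSQL 10+), the write_lag, flush_lag, and replay_lag columns of pg_stat_replication show the same kind of delay for each connected replica.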

Then address the cause: upgrade replica hardware or network capacity, shorten long-running queries on replicas, or add replicas to spread the read load.
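One caveat with the replica query above: pg_last_xact_replay_timestamp() is the commit time of the last replayed transaction, so on a quiet system the result keeps growing even when the replica is fully caught up. A common refinement reports zero when everything the replica has received has also been replayed (pg_last_wal_receive_lsn() = pg_last_wal_replay_lsn()). The same logic in Python, with values read from the replica passed in as plain arguments; note that it measures apply lag only, not WAL the replica has yet to receive:

```python
from datetime import datetime, timezone
from typing import Optional


def replica_lag_seconds(receive_lsn: str, replay_lsn: str,
                        last_replay_ts: Optional[datetime], now: datetime) -> float:
    """Estimate replica apply lag, reporting 0 when all received WAL has been replayed."""
    if receive_lsn == replay_lsn:
        # Nothing is waiting to be applied, so an old timestamp just means an idle primary.
        return 0.0
    if last_replay_ts is None:
        # No transaction has been replayed since the replica started.
        return float("inf")
    return (now - last_replay_ts).total_seconds()


now = datetime(2024, 11, 29, 12, 0, 30, tzinfo=timezone.utc)
last = datetime(2024, 11, 29, 12, 0, 0, tzinfo=timezone.utc)
print(replica_lag_seconds("0/5000100", "0/5000100", last, now))  # 0.0
print(replica_lag_seconds("0/5000200", "0/5000100", last, now))  # 30.0
```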

Split-brain Scenarios

Split-brain occurs when multiple servers believe they’re the primary, typically after network partitioning.

Solution: Implement a consensus mechanism (like Patroni with etcd) that ensures only one server can be primary at any time.
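The core idea behind such consensus-backed setups is a leader lease: a node may act as primary only while it holds a key with a time-to-live in the consensus store, and it must keep renewing that key. The toy Python model below replaces etcd with an in-memory object and takes the current time as a parameter so the behaviour is deterministic. It illustrates the idea only and is not Patroni's actual implementation; in a real store the check-and-set must be atomic.

```python
from typing import Optional


class LeaderLease:
    """Toy in-memory stand-in for a leader key with a TTL in a consensus store."""

    def __init__(self, ttl_s: float) -> None:
        self.ttl_s = ttl_s
        self.holder: Optional[str] = None
        self.expires_at = 0.0

    def try_acquire(self, node: str, now: float) -> bool:
        """Take or renew the lease; succeeds only if it is free, expired, or already ours."""
        if self.holder is None or now >= self.expires_at or self.holder == node:
            self.holder = node
            self.expires_at = now + self.ttl_s
            return True
        return False

    def may_act_as_primary(self, node: str, now: float) -> bool:
        """A node without a valid lease must stop accepting writes."""
        return self.holder == node and now < self.expires_at


lease = LeaderLease(ttl_s=30)
print(lease.try_acquire("node1", now=0))          # True: node1 becomes primary
print(lease.try_acquire("node2", now=10))         # False: node1's lease is still valid
# node1 is cut off by a network partition and cannot renew before t=30.
print(lease.try_acquire("node2", now=31))         # True: node2 takes over
print(lease.may_act_as_primary("node1", now=31))  # False: node1 must step down
```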

Connection Problems

If replication fails to establish or breaks frequently, check:

- Network connectivity between servers

- Firewall rules allowing PostgreSQL ports

- Proper pg_hba.conf entries

- Correct replication user credentials
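For the first two checks, a quick TCP connection test from the replica to the primary's port separates network or firewall problems from authentication problems: if the port cannot be reached, pg_hba.conf and credentials are not yet the issue. A minimal Python sketch using only the standard library (the function name is hypothetical; pg_isready -h <host> -p 5432 from the PostgreSQL client tools is an alternative):

```python
import socket


def port_reachable(host: str, port: int = 5432, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example, run on the replica: port_reachable("primary_server_ip")
```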

Conclusion and Next Steps

PostgreSQL replication and high availability are powerful tools that protect your data and ensure continuous service. While the concepts may seem complex at first, starting with basic streaming replication gives you immediate benefits and a foundation to build upon.

As you become more comfortable, explore more advanced solutions like logical replication or automatic failover tools. Remember that the best solution depends on your specific requirements for data consistency, availability, and operational complexity.

Where to Go From Here

- Practice setting up replication in a test environment

- Explore monitoring tools like Prometheus and Grafana for replication metrics

- Learn about point-in-time recovery to complement your high availability strategy

- Consider managed PostgreSQL services if managing infrastructure isn’t your core business

With these foundations, you’re well on your way to building robust, highly available PostgreSQL databases for your applications.