Introduction

Hello there! If you’re reading this, you’re probably taking your first steps into the world of PostgreSQL database administration. One of the most important skills you’ll need to develop is understanding how to tune your PostgreSQL configuration for different types of workloads.

Think of your PostgreSQL database as a car engine. Just like you wouldn’t use the same engine settings for a city commute and a cross-country road trip, your database needs different configurations depending on what you’re asking it to do.

In this guide, I’ll walk you through the essentials of PostgreSQL configuration tuning in a way that hopefully makes sense, even if you’re just starting out. By the end, you’ll have a solid understanding of which knobs to turn for different scenarios.

Table of Contents

- Understanding Your Workload

- Memory Parameters

- CPU Parameters

- Storage Parameters

- Workload-Specific Configurations

  - OLTP (Online Transaction Processing)

  - OLAP (Online Analytical Processing)

  - Mixed Workloads

- Monitoring and Adjusting

- Practical Examples

- Conclusion

Understanding Your Workload

Before you start tweaking any configuration parameters, you need to understand what kind of workload your database will be handling. Let me explain the main types:

- OLTP (Online Transaction Processing): Think of this as lots of small, quick operations. A banking application processing thousands of small transactions would be a classic example. These workloads need quick response times.

- OLAP (Online Analytical Processing): These are your big, complex queries that crunch through lots of data. Think of a business intelligence application that generates monthly reports by analyzing millions of rows of data.

- Mixed Workloads: Many real-world applications have both OLTP and OLAP characteristics, which makes tuning more nuanced.

Remember: Knowing your workload is like knowing your destination before a journey. It determines everything else!

Memory Parameters

The most impactful tuning you can do often involves memory allocation. Let’s look at some key parameters:

shared_buffers

This is PostgreSQL’s main memory area for caching data pages. Think of it as your database’s short-term memory. Changing it requires a server restart.

# Default is typically 128MB

shared_buffers = 2GB # For a server with 8GB RAM

Rule of thumb: Start with 25% of your server’s RAM for dedicated database servers.

For OLTP workloads with many small transactions, a larger shared_buffers helps because the same data gets accessed frequently.

For OLAP workloads, you might not benefit as much from extremely large values since analytical queries often scan through more data than can fit in memory anyway.
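The 25% rule is simple arithmetic, but it is easy to slip on units. Here is a minimal Python sketch that turns a RAM size into a value you could paste into postgresql.conf. The helper names (`suggest_shared_buffers`, `format_mb`) are hypothetical and not part of PostgreSQL.

```python
# Sketch: size shared_buffers at roughly 25% of RAM (the rule of thumb above).
# Works in whole megabytes and emits a postgresql.conf-style value.


def format_mb(mb: int) -> str:
    """Format a megabyte count as postgresql.conf accepts it, e.g. '2GB' or '1536MB'."""
    if mb % 1024 == 0:
        return f"{mb // 1024}GB"
    return f"{mb}MB"


def suggest_shared_buffers(ram_gb: float, fraction: float = 0.25) -> str:
    """Return a starting shared_buffers value for a dedicated database server."""
    mb = int(ram_gb * 1024 * fraction)
    return format_mb(mb)


print(suggest_shared_buffers(8))   # 2GB, matching the example above
print(suggest_shared_buffers(16))  # 4GB
print(suggest_shared_buffers(6))   # 1536MB
```

Treat the result as a starting point; the monitoring section later in this guide is where you confirm it.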

work_mem

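This parameter sets how much memory a single sort or hash operation (used by ORDER BY, DISTINCT, merge joins, hash joins, and hash-based aggregation) may use before it spills to temporary files on disk.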

# Default is typically 4MB

work_mem = 32MB # Adjusted higher for a system with plenty of RAM

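Rule of thumb: Be careful increasing this! The limit applies per operation, not per query or per server: one complex query can run several sorts and hashes at once, each parallel worker gets its own allowance, and every active connection can do the same.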

For OLTP: Keep this relatively low (4-16MB) to prevent memory pressure from many concurrent operations.

For OLAP: Set higher (32-256MB) to help with those complex sorts and joins in your analytical queries.
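To see why the warning matters, here is a back-of-the-envelope worst case in Python. It assumes every connection runs a query at the same moment, that each query has a fixed number of sort or hash steps, and that each parallel worker gets its own work_mem. It also ignores hash_mem_multiplier, which lets hash operations use more than work_mem in PostgreSQL 13 and later, so the real ceiling can be higher. The function name is hypothetical.

```python
# Rough upper bound on memory that work_mem could account for at peak.


def worst_case_work_mem_mb(work_mem_mb: int, connections: int,
                           ops_per_query: int = 2, workers_per_query: int = 0) -> int:
    """Peak MB if every connection runs `ops_per_query` sort/hash steps at once."""
    processes_per_query = 1 + workers_per_query  # the leader plus its parallel workers
    return work_mem_mb * ops_per_query * processes_per_query * connections


# OLTP-style: 200 connections, 8MB, no parallelism
print(worst_case_work_mem_mb(8, 200))                        # 3200
# OLAP-style: 20 connections, 256MB, 4 parallel workers per query
print(worst_case_work_mem_mb(256, 20, workers_per_query=4))  # 51200
```

The second case works out to 50GB from only 20 connections, which is why the OLAP advice pairs a high work_mem with a low connection count.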

maintenance_work_mem

This is used for maintenance operations like VACUUM, CREATE INDEX, etc.

# Default is typically 64MB

maintenance_work_mem = 256MB

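Rule of thumb: Set this higher than work_mem, since these operations benefit from more memory and only a few of them usually run at once. Keep in mind that each autovacuum worker can also use up to this much unless autovacuum_work_mem is set separately.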
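Autovacuum is the main case where maintenance memory is used by several processes at once. The sketch below, a hypothetical helper, applies PostgreSQL’s fallback rule (autovacuum_work_mem = -1, the default, means each worker uses maintenance_work_mem) and multiplies by autovacuum_max_workers (default 3) to get an upper bound.

```python
def autovacuum_memory_mb(maintenance_work_mem_mb: int,
                         autovacuum_work_mem_mb: int = -1,
                         autovacuum_max_workers: int = 3) -> int:
    """Upper bound on memory used by concurrently running autovacuum workers.

    autovacuum_work_mem = -1 (the default) means each worker falls back to
    maintenance_work_mem; autovacuum_max_workers defaults to 3.
    """
    per_worker = (maintenance_work_mem_mb if autovacuum_work_mem_mb == -1
                  else autovacuum_work_mem_mb)
    return per_worker * autovacuum_max_workers


print(autovacuum_memory_mb(256))                               # 768
print(autovacuum_memory_mb(1024))                              # 3072
print(autovacuum_memory_mb(1024, autovacuum_work_mem_mb=256))  # 768
```

If you raise maintenance_work_mem for faster index builds, setting autovacuum_work_mem explicitly keeps the background workers from inheriting the larger value.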

CPU Parameters

Now let’s talk about how to tune PostgreSQL for your CPU resources:

max_connections

This isn’t strictly a CPU parameter, but it affects CPU usage significantly.

# Default is typically 100

max_connections = 200

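Rule of thumb: Don’t set this too high! Each connection is served by its own backend process with its own memory, and changing this setting requires a server restart. For OLTP workloads, you might need more connections, though a connection pooler is often a better answer than a very high limit. For OLAP, fewer connections, each allowed more memory and parallel workers, are typically better.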

max_worker_processes and max_parallel_workers

These control PostgreSQL’s ability to parallelize operations.

# Defaults: max_worker_processes = 8, max_parallel_workers = 8, max_parallel_workers_per_gather = 2

max_worker_processes = 8

max_parallel_workers = 8

max_parallel_workers_per_gather = 4

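Rule of thumb: Set these based on the number of CPU cores available. Parallel workers are taken from the max_worker_processes pool, so keep max_parallel_workers_per_gather no larger than max_parallel_workers, and max_parallel_workers no larger than max_worker_processes (changing max_worker_processes requires a restart). For OLAP workloads that benefit from parallelism, set these higher.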
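As a starting point, the three settings can be derived from a core count while keeping them consistent with each other. This Python sketch assumes a dedicated server and gives each Gather node half the cores, the same ratio as the e-commerce example later in this guide; treat that ratio as an assumption, not an official formula. It keeps max_worker_processes at or above its default of 8 because other background workers (logical replication, for example) also draw from that pool. The function name is hypothetical.

```python
def parallel_settings(cores: int) -> dict[str, int]:
    """Starting values for the three parallel-query settings on `cores` CPU cores.

    Keeps max_parallel_workers_per_gather <= max_parallel_workers <= max_worker_processes.
    """
    if cores < 1:
        raise ValueError("cores must be positive")
    max_worker_processes = max(8, cores)  # never go below the default of 8
    max_parallel_workers = cores
    per_gather = max(1, cores // 2)       # assumed ratio: half the cores per Gather
    return {
        "max_worker_processes": max_worker_processes,
        "max_parallel_workers": max_parallel_workers,
        "max_parallel_workers_per_gather": per_gather,
    }


for name, value in parallel_settings(8).items():
    print(f"{name} = {value}")
```

For 8 cores this reproduces the 8 / 8 / 4 values shown above.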

Storage Parameters

How PostgreSQL interacts with your storage system matters a lot:

effective_io_concurrency

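This parameter tells PostgreSQL how many concurrent disk I/O requests the storage can handle efficiently, which it uses to prefetch data pages (for example, during bitmap heap scans).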

# Default is 1 (16 as of PostgreSQL 18)

effective_io_concurrency = 200 # For SSDs

Rule of thumb: For a single HDD, keep this low (1-4). For a RAID array of spinning disks, the number of drives in the array is a reasonable starting point. For SSDs, set it much higher (100-300).
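The same rule of thumb can be written as a tiny lookup. This is a hypothetical helper, not a PostgreSQL API; for spinning-disk arrays it follows older PostgreSQL documentation, which suggested starting with the number of drives, and the SSD value of 200 is simply the one used throughout this guide.

```python
def suggest_io_concurrency(storage: str, drives: int = 1) -> int:
    """Hypothetical starting value for effective_io_concurrency.

    storage: "ssd" or "hdd"; drives: number of spinning disks in the array.
    """
    if storage == "ssd":
        return 200  # SSDs handle many concurrent requests; 100-300 is the usual range
    if storage == "hdd":
        return max(1, drives)  # one disk: 1; an array of N disks: start near N
    raise ValueError(f"unknown storage type: {storage!r}")


print(suggest_io_concurrency("ssd"))            # 200
print(suggest_io_concurrency("hdd"))            # 1
print(suggest_io_concurrency("hdd", drives=4))  # 4
```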

random_page_cost

This tells PostgreSQL how expensive it is to fetch a random (non-sequential) page from disk, relative to reading a page sequentially (seq_page_cost, which defaults to 1.0).

# Default is 4.0

random_page_cost = 1.1 # For SSDs

Rule of thumb: For SSDs, use values between 1.1 and 2.0. For traditional HDDs, the default of 4.0 is reasonable.
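Why does this one number change query plans so much? Under a deliberately simplified cost model that ignores CPU costs, caching, and index page reads, fetching k of a table’s P pages at random costs k × random_page_cost, while a full sequential scan costs P × seq_page_cost. Random access therefore looks cheaper only while k / P stays below seq_page_cost / random_page_cost. The real planner is more involved; this Python sketch only computes that break-even fraction.

```python
def break_even_fraction(random_page_cost: float, seq_page_cost: float = 1.0) -> float:
    """Fraction of a table's pages below which random reads beat a full sequential scan
    (simplified model: page costs only, no CPU or caching effects)."""
    return seq_page_cost / random_page_cost


print(f"{break_even_fraction(4.0):.0%}")  # 25%
print(f"{break_even_fraction(1.1):.0%}")  # 91%
```

At the default of 4.0, random access only wins when it touches less than about a quarter of the pages; at 1.1 the threshold rises to about 91%, which is why the planner becomes far more willing to choose index scans once you tell it you are on SSDs.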

Workload-Specific Configurations

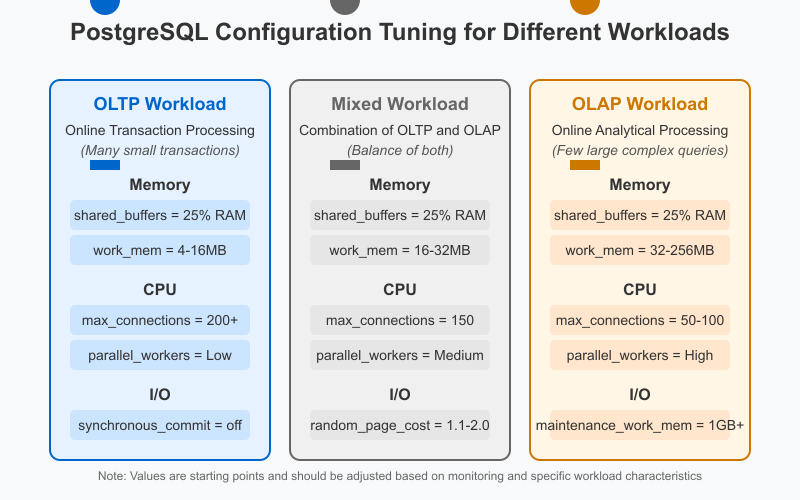

Now, let’s put it all together with some starting configurations for different workloads:

OLTP (Online Transaction Processing)

For systems handling many small, concurrent transactions:

shared_buffers = 25% of RAM

work_mem = 4-16MB

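effective_cache_size = 75% of RAM (a planner estimate of how much data the OS and PostgreSQL can cache together; it does not allocate any memory)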

maintenance_work_mem = 256MB

random_page_cost = 1.1 (for SSDs)

effective_io_concurrency = 200 (for SSDs)

max_connections = higher (200+)

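commit_delay = 10 (in microseconds; the default is 0. It only takes effect when at least commit_siblings other transactions, 5 by default, are active, so it helps mainly when many transactions commit around the same time)

synchronous_commit = off (if you can tolerate losing the last few hundred milliseconds of committed transactions after a crash; the database itself stays consistent)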
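How large is the data-loss window with synchronous_commit = off? The PostgreSQL documentation bounds it: an asynchronous commit can take up to three times wal_writer_delay (default 200ms) to reach durable storage, and transactions committed inside that window before a crash may be lost. A small arithmetic sketch in Python, with a hypothetical function name:

```python
def async_commit_loss_window_ms(wal_writer_delay_ms: int = 200) -> int:
    """Worst-case delay before an asynchronously committed transaction is durable.

    With synchronous_commit = off, the maximum delay is three times wal_writer_delay.
    """
    return 3 * wal_writer_delay_ms


print(async_commit_loss_window_ms())     # 600
print(async_commit_loss_window_ms(100))  # 300
```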

OLAP (Online Analytical Processing)

For systems running complex analytical queries:

shared_buffers = 25% of RAM

work_mem = 32-256MB (be careful with this!)

effective_cache_size = 75% of RAM

maintenance_work_mem = 1GB or more

max_parallel_workers_per_gather = 4-8 (depending on CPU cores; make sure max_parallel_workers is at least this large)

random_page_cost = 1.1 (for SSDs)

cpu_tuple_cost = 0.03 (the default is 0.01)

max_connections = lower (50-100)
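Raising max_parallel_workers_per_gather only helps if the shared pool of workers has room. Parallel workers are drawn from a pool sized by max_parallel_workers; when it runs dry, a query simply gets fewer workers (or none) instead of waiting. This hypothetical Python helper shows how many queries can get their full complement at once, ignoring any other use of the pool.

```python
def fully_parallel_queries(max_parallel_workers: int, per_gather: int) -> int:
    """How many queries can each get `per_gather` workers from the shared pool at once."""
    if per_gather < 1:
        raise ValueError("per_gather must be at least 1 for parallel plans")
    return max_parallel_workers // per_gather


print(fully_parallel_queries(8, 4))   # 2
print(fully_parallel_queries(16, 8))  # 2
print(fully_parallel_queries(16, 4))  # 4
```

With the default pool of 8 and 4 workers per Gather, only two analytical queries run at full parallelism; a third would get fewer workers.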

Mixed Workloads

Many systems need to handle both transactional and analytical workloads:

shared_buffers = 25% of RAM

work_mem = 16-32MB

effective_cache_size = 75% of RAM

maintenance_work_mem = 512MB

max_parallel_workers_per_gather = 2-4

random_page_cost = 1.1 (for SSDs)

effective_io_concurrency = 200 (for SSDs)
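The three profiles differ in only a handful of settings, so they can be written down as data. This Python sketch renders one as postgresql.conf lines for a given amount of RAM. The dictionary and function names, and the specific values picked from each range above, are assumptions for illustration; memory values are emitted in MB, which postgresql.conf accepts.

```python
# Starting values picked from the ranges listed above (other values inside each
# range are just as valid). RAM- and storage-dependent settings are added below.
PROFILES = {
    "oltp": {"work_mem": "8MB", "maintenance_work_mem": "256MB",
             "max_connections": 200},
    "olap": {"work_mem": "64MB", "maintenance_work_mem": "1GB",
             "max_parallel_workers_per_gather": 4, "cpu_tuple_cost": 0.03,
             "max_connections": 50},
    "mixed": {"work_mem": "16MB", "maintenance_work_mem": "512MB",
              "max_parallel_workers_per_gather": 2},
}


def render_profile(workload: str, ram_gb: int, ssd: bool = True) -> str:
    """Return postgresql.conf lines for one of the workload profiles."""
    ram_mb = ram_gb * 1024
    settings = {
        "shared_buffers": f"{ram_mb // 4}MB",            # 25% of RAM
        "effective_cache_size": f"{ram_mb * 3 // 4}MB",  # 75% of RAM
    }
    settings.update(PROFILES[workload])
    if ssd:
        settings["random_page_cost"] = 1.1
        settings["effective_io_concurrency"] = 200
    return "\n".join(f"{name} = {value}" for name, value in settings.items())


print(render_profile("mixed", ram_gb=16))
```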

Monitoring and Adjusting

Configuration tuning isn’t a “set it and forget it” task. You need to monitor your database’s performance and adjust accordingly.

Tools to help you monitor:

- pg_stat_statements extension

- EXPLAIN ANALYZE for query performance

- External monitoring tools like Prometheus with Grafana

If you’re seeing:

- High CPU usage: Look at query optimization, connection pooling

- High disk I/O: Review your indexing strategy and storage parameters

- Memory pressure: Lower work_mem or max_connections first, since those are the settings whose memory use multiplies under concurrent load
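One concrete sign that work_mem is too small for a query is an operation spilling to disk. In text-format EXPLAIN (ANALYZE) output this appears as a line like "Sort Method: external merge  Disk: 10240kB", or as a hash with more than one batch. The Python sketch below scans plan text for those two patterns. The sample plan is abbreviated and made up for illustration, the function name is hypothetical, and the regular expressions cover only these common forms.

```python
import re

# Text-format EXPLAIN (ANALYZE) lines that indicate an operation spilled to disk.
SORT_SPILL = re.compile(r"Sort Method: external \w+\s+Disk: (\d+)kB")
HASH_BATCHES = re.compile(r"Batches: (\d+)")

SAMPLE_PLAN = """\
Sort (actual time=812.4..955.1 rows=500000 loops=1)
  Sort Key: o.created_at
  Sort Method: external merge  Disk: 10240kB
  ->  Hash Join (actual time=120.3..610.8 rows=500000 loops=1)
        Hash Cond: (o.customer_id = c.id)
        ->  Seq Scan on orders o (actual time=0.02..150.7 rows=500000 loops=1)
        ->  Hash (actual time=118.9..118.9 rows=200000 loops=1)
              Buckets: 65536  Batches: 4  Memory Usage: 4097kB
"""


def find_spills(plan_text: str) -> list[str]:
    """Return human-readable notes for each sort or hash that exceeded work_mem."""
    findings = []
    for line in plan_text.splitlines():
        if m := SORT_SPILL.search(line):
            findings.append(f"sort spilled {m.group(1)}kB to disk")
        elif (m := HASH_BATCHES.search(line)) and int(m.group(1)) > 1:
            findings.append(f"hash split into {m.group(1)} batches")
    return findings


for note in find_spills(SAMPLE_PLAN):
    print(note)
```

A spill on one heavy report is a hint to raise work_mem for that session rather than for the whole server.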

Practical Examples

Let’s look at a real-world example. Imagine you’re running a small e-commerce site on a server with 16GB of RAM and SSDs:

# postgresql.conf for e-commerce application (mixed workload)

shared_buffers = 4GB # 25% of RAM

work_mem = 16MB # Moderate value for mixed workload

maintenance_work_mem = 512MB # Generous for maintenance tasks

effective_cache_size = 12GB # 75% of RAM

random_page_cost = 1.1 # Using SSDs

effective_io_concurrency = 200 # For SSDs

max_connections = 150 # Moderate connections

max_parallel_workers = 8 # Assuming 8 CPU cores

max_parallel_workers_per_gather = 4 # Half of workers
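Before deploying a config like this, it can help to sanity-check it against the rules of thumb from earlier. This Python sketch parses simple `name = value # comment` lines (no quoting or include directives), converts memory units (PostgreSQL uses powers of 1024), and flags a few inconsistencies. The helper names and the 15-40% tolerance band for shared_buffers are assumptions, not PostgreSQL rules.

```python
import re

# The e-commerce settings from above, pasted as a string (comments included).
EXAMPLE_CONF = """
shared_buffers = 4GB # 25% of RAM
work_mem = 16MB # Moderate value for mixed workload
maintenance_work_mem = 512MB # Generous for maintenance tasks
effective_cache_size = 12GB # 75% of RAM
max_connections = 150 # Moderate connections
max_parallel_workers = 8 # Assuming 8 CPU cores
max_parallel_workers_per_gather = 4 # Half of workers
"""

# PostgreSQL memory units are powers of 1024.
UNITS_MB = {"kB": 1 / 1024, "MB": 1, "GB": 1024, "TB": 1024 * 1024}


def parse_conf(text: str) -> dict[str, str]:
    """Parse simple 'name = value # comment' lines (no quoting or include support)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" in line:
            name, value = (part.strip() for part in line.split("=", 1))
            settings[name] = value
    return settings


def to_mb(value: str) -> float:
    """Convert a memory setting such as '4GB' or '512MB' to megabytes."""
    match = re.fullmatch(r"(\d+)\s*(kB|MB|GB|TB)", value)
    if match is None:
        raise ValueError(f"not a memory size: {value!r}")
    return int(match.group(1)) * UNITS_MB[match.group(2)]


def check(conf: dict[str, str], ram_gb: int) -> list[str]:
    """Return warnings where the config strays from this guide's rules of thumb."""
    ram_mb = ram_gb * 1024
    warnings = []
    # Assumed tolerance band around the 25% rule of thumb.
    if not 0.15 <= to_mb(conf["shared_buffers"]) / ram_mb <= 0.4:
        warnings.append("shared_buffers is far from ~25% of RAM")
    if to_mb(conf["effective_cache_size"]) > ram_mb:
        warnings.append("effective_cache_size exceeds physical RAM")
    if int(conf["max_parallel_workers_per_gather"]) > int(conf["max_parallel_workers"]):
        warnings.append("per-gather workers exceed the shared worker pool")
    # Worst case if every connection runs one work_mem-sized operation at once.
    peak = to_mb(conf["shared_buffers"]) + int(conf["max_connections"]) * to_mb(conf["work_mem"])
    if peak > ram_mb:
        warnings.append(f"shared_buffers plus one work_mem per connection ({peak:.0f}MB) exceeds RAM")
    return warnings


print(check(parse_conf(EXAMPLE_CONF), ram_gb=16))  # []
```

Running the same check with `ram_gb=8` flags both shared_buffers and effective_cache_size, a quick way to catch a config copied onto a smaller server.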

During normal operations, this works well. But during your end-of-month reporting:

# Temporary adjustment for monthly reporting

work_mem = 64MB # Increased for complex reports

max_parallel_workers_per_gather = 6 # More parallelism
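Both of these settings can also be changed for a single session with SQL’s SET command, so only the reporting job gets the larger values and they revert when that session ends (or on RESET). The Python sketch below just builds those statements; sending them over the reporting job’s database connection is left to whatever driver you use. The function name is hypothetical, and it is meant for hard-coded, trusted settings rather than user input.

```python
import re


def session_overrides(settings: dict[str, str]) -> list[str]:
    """Build SET statements that change settings for the current session only.

    Names are checked against a simple identifier pattern; values are quoted as
    SQL string literals with single quotes doubled.
    """
    statements = []
    for name, value in settings.items():
        if not re.fullmatch(r"[a-z_][a-z0-9_]*", name):
            raise ValueError(f"unexpected setting name: {name!r}")
        quoted = value.replace("'", "''")
        statements.append(f"SET {name} = '{quoted}';")
    return statements


reporting = {"work_mem": "64MB", "max_parallel_workers_per_gather": "6"}
for statement in session_overrides(reporting):
    print(statement)
# SET work_mem = '64MB';
# SET max_parallel_workers_per_gather = '6';
```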

Conclusion

PostgreSQL configuration tuning is both an art and a science. Start with understanding your workload, apply the rule-of-thumb settings for that workload type, monitor performance, and adjust as needed.

Remember that no single configuration is perfect for all situations. The best DBAs develop an intuition for which parameters to adjust based on observed performance metrics.

Don’t be afraid to experiment in your development and staging environments before making changes to production. And always, always make one change at a time so you can see its impact clearly.

Happy tuning!