Introduction

PostgreSQL has established itself as the most advanced open-source relational database management system available today. Its robust architecture combines reliability, data integrity, and extensibility into a powerful database solution trusted by organizations of all sizes. In this comprehensive guide, we’ll explore the architectural components that make PostgreSQL exceptional, with practical examples to solidify your understanding.

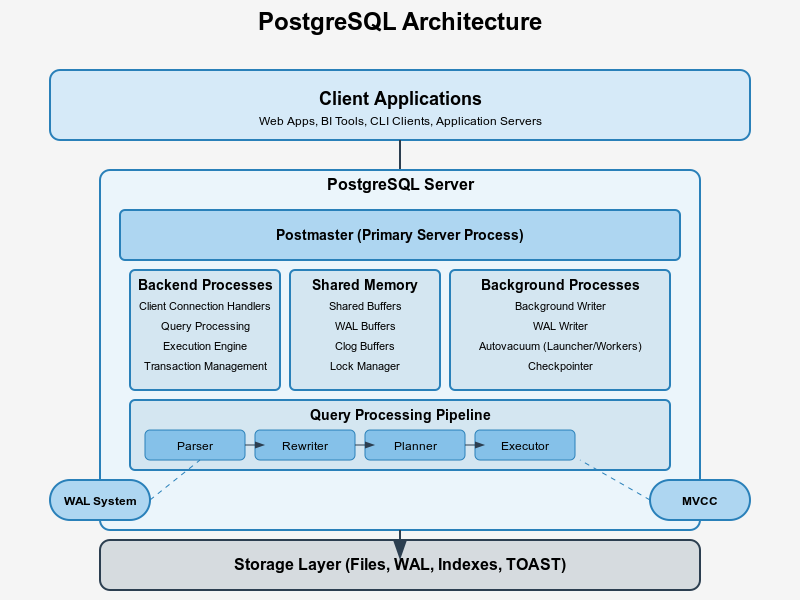

Visualizing PostgreSQL Architecture

Core Architectural Principles

Before diving into specific components, it’s important to understand the fundamental principles that guide PostgreSQL’s design:

- ACID Compliance: PostgreSQL fully supports Atomicity, Consistency, Isolation, and Durability, ensuring reliable transaction processing.

- Extensibility: The system is designed to be highly customizable through its extension framework.

- Standards Compliance: PostgreSQL adheres closely to SQL standards while providing powerful extensions.

- Concurrency: The Multi-Version Concurrency Control (MVCC) mechanism enables high levels of concurrent access.

- Resilience: Write-Ahead Logging (WAL) ensures data integrity even during system failures.

Client-Server Model

PostgreSQL operates as a client-server database. This fundamental architecture separates the database engine (server) from the applications that need to access the data (clients).

The PostgreSQL Server (postmaster)

The postmaster is the first process that starts when PostgreSQL is initialized. This is the main controlling process that:

- Listens for client connections

- Creates backend processes to handle those connections

- Manages shared memory

- Coordinates background worker processes

For example, when you start PostgreSQL using the command:

pg_ctl start -D /var/lib/postgresql/14/dataThe postmaster process begins, reads configuration files, allocates shared memory, and begins listening on its designated port (typically 5432).

Backend Processes

When a client connects to PostgreSQL, the postmaster forks a new dedicated backend process (also called a “server process”). Each backend process serves exactly one client connection and exists solely for that connection’s lifetime.

For example, if three applications connect to your database:

Application A → Backend Process 1

Application B → Backend Process 2

Application C → Backend Process 3Each backend process operates independently, managing its own client connection and executing SQL statements within its own transaction context. This one-to-one relationship between connections and processes is central to PostgreSQL’s architecture.

Practical Example: Viewing Active Connections

You can observe this architecture in action by querying the pg_stat_activity view:

SELECT pid, usename, application_name, client_addr, state

FROM pg_stat_activity;This might return:

pid | usename | application_name | client_addr | state

-------+---------+--------------------+---------------+------------

29481 | appuser | Order Processing | 192.168.1.100 | active

29482 | analyst | Tableau Desktop | 192.168.1.101 | idle

29483 | webuser | Product API | 192.168.1.102 | activeEach row represents a separate backend process serving a specific client connection.

Memory Architecture

PostgreSQL’s memory architecture consists of two main areas: local memory (per-process) and shared memory (global).

Local Memory Areas

Each backend process allocates its own memory areas:

- Work_mem: Used for sorting and hash tables during query execution

- Maintenance_work_mem: Used for maintenance operations like VACUUM

- Temp_buffers: Used for temporary table operations

For example, when a query needs to sort data:

SELECT customer_name

FROM customers

ORDER BY purchase_total DESC;The backend process allocates memory from its work_mem allocation to perform the sort. If the data exceeds work_mem, it will spill to disk, significantly impacting performance.

Shared Memory

All PostgreSQL processes share access to several memory structures:

- Shared Buffers: The database’s primary cache for table and index data

- WAL Buffers: Temporarily stores Write-Ahead Log records before they’re written to disk

- Commit Log: Tracks transaction commit status

Shared Buffers Example

When a query needs to read data, PostgreSQL first checks the shared buffers:

Query: SELECT * FROM products WHERE product_id = 1234;

Execution Flow:

1. Check if page containing product_id 1234 is in shared buffers

2. If found (buffer hit), read directly from memory

3. If not found (buffer miss), read from disk into shared buffers

4. Return data to clientThe effectiveness of shared buffers can be observed through the hit ratio:

SELECT

sum(heap_blks_read) as heap_read,

sum(heap_blks_hit) as heap_hit,

sum(heap_blks_hit) / (sum(heap_blks_hit) + sum(heap_blks_read)) as ratio

FROM pg_statio_user_tables;A well-tuned PostgreSQL instance typically shows a hit ratio above 0.99 (99%).

Process Architecture

Beyond the postmaster and backend processes, PostgreSQL employs several specialized background processes:

Background Writer

The background writer periodically flushes dirty (modified) shared buffers to disk to prevent checkpoint processes from having to write too many buffers at once.

Operation:

1. Sleep for bgwriter_delay milliseconds (default: 200ms)

2. Scan shared buffer pool

3. Write up to bgwriter_lru_maxpages dirty pages to disk

4. RepeatThis process helps spread out I/O operations to avoid performance spikes.

Checkpointer

The checkpointer process ensures that all modified data is eventually written to disk, creating a “checkpoint” where all data is guaranteed to be consistent.

Operation:

1. Signal all backends to flush their WAL data

2. Write all dirty shared buffers to disk

3. Create a checkpoint record in the WALCheckpoints occur based on the settings checkpoint_timeout (time-based) and max_wal_size (WAL volume-based).

Autovacuum Launcher and Workers

The autovacuum launcher periodically starts autovacuum worker processes to clean up dead tuples and update statistics.

Example Scenario:

Table: orders (1 million rows)

Operations: 200,000 updates performed

Result: 200,000 old row versions now "dead"

Autovacuum Process:

1. Launcher detects table exceeds autovacuum threshold

2. Worker process scans table, identifying dead tuples

3. Worker removes dead tuples, making space available for reuse

4. Worker updates statistics for query plannerThis automatic maintenance is crucial for maintaining performance over time.

WAL Writer

The WAL (Write-Ahead Log) writer process periodically flushes WAL data to disk, ensuring durability even in case of system failure.

Operation:

1. Collect WAL data from WAL buffers

2. Write to disk

3. Signal backend processes their WAL data is safeThis mechanism allows PostgreSQL to achieve durability with minimal performance impact.

Storage Architecture

PostgreSQL organizes data in a hierarchical structure:

Database Cluster

At the top level is the database cluster, which is a collection of databases managed by a single PostgreSQL server instance. A database cluster is stored in a single directory, often called the data directory (PGDATA).

# Structure of a database cluster directory

$ ls -la /var/lib/postgresql/14/data/

drwx------ postgres postgres base/ # Databases

drwx------ postgres postgres global/ # Cluster-wide tables

drwx------ postgres postgres pg_commit_ts/ # Commit timestamp data

drwx------ postgres postgres pg_dynshmem/ # Dynamic shared memory

drwx------ postgres postgres pg_logical/ # Logical replication

drwx------ postgres postgres pg_multixact/ # Multitransaction status

drwx------ postgres postgres pg_notify/ # LISTEN/NOTIFY data

drwx------ postgres postgres pg_replslot/ # Replication slots

drwx------ postgres postgres pg_serial/ # Serializable transaction data

drwx------ postgres postgres pg_snapshots/ # Exported snapshots

drwx------ postgres postgres pg_stat/ # Statistics subsystem

drwx------ postgres postgres pg_stat_tmp/ # Temporary statistics files

drwx------ postgres postgres pg_subtrans/ # Subtransaction data

drwx------ postgres postgres pg_tblspc/ # Tablespace symlinks

drwx------ postgres postgres pg_twophase/ # Prepared transactions

drwx------ postgres postgres pg_wal/ # WAL files

drwx------ postgres postgres pg_xact/ # Transaction commit statusDatabases and Tables

Within the cluster, data is organized into databases, which contain schemas and tables.

Each table is stored in one or more files on disk. By default, each table with data volume under 1GB is stored in a single file named after its object identifier (OID). Tables larger than 1GB are divided into multiple 1GB segments.

Storage Hierarchy:

Database Cluster (PGDATA directory)

└── Database (directory in base/)

└── Table (file named by OID)

└── Pages (8KB blocks)

└── Tuples (rows)You can find the physical location of a table with:

SELECT pg_relation_filepath('customers');

-- Result: base/16384/16385Storage Pages

Each table file is divided into fixed-size pages (typically 8KB). A page is the basic unit of storage in PostgreSQL:

Page Structure:

┌─────────────────┐

│ Page Header │ Contains metadata about the page

├─────────────────┤

│ Item Pointers │ Array of pointers to tuple locations

├─────────────────┤

│ Free Space │ Available space for new/updated tuples

├─────────────────┤

│ Tuples (rows) │ The actual data stored in the page

└─────────────────┘Tuple Structure

Each tuple (row) contains:

- Header data (visibility information, etc.)

- NULL bitmap

- User data (the actual column values)

When a row is updated, PostgreSQL doesn’t overwrite the existing row. Instead, it:

- Inserts a new version of the row

- Marks the old version as obsolete

- Updates indexes to point to the new version

This approach enables PostgreSQL’s MVCC system.

Multi-Version Concurrency Control (MVCC)

MVCC is one of PostgreSQL’s most powerful features, allowing readers to see a consistent snapshot of the database without blocking writers.

How MVCC Works

When a transaction begins, PostgreSQL assigns it a transaction ID (XID). This XID is used to determine which row versions the transaction can see.

Example Scenario:

Time 1: Transaction A (XID 100) reads row R

Time 2: Transaction B (XID 101) updates row R, creating new version R'

Time 3: Transaction A reads row R again

Result: Transaction A sees the original version R both times,

maintaining transaction isolationPractical MVCC Example

Let’s see MVCC in action:

Terminal 1:

BEGIN;

SELECT balance FROM accounts WHERE id = 1;

-- Result: 1000

-- Wait here while Terminal 2 executes...

SELECT balance FROM accounts WHERE id = 1;

-- Result: Still 1000 (not 900) because of MVCC

COMMIT;Terminal 2:

BEGIN;

UPDATE accounts SET balance = 900 WHERE id = 1;

COMMIT;With MVCC, Terminal 1 continues to see the original balance of 1000 even after Terminal 2 has committed an update. This provides transaction isolation without locking.

Tuple Visibility

Each tuple has visibility information stored in its header:

xmin: The ID of the creating transactionxmax: The ID of the deleting transaction (or 0 if not deleted)cmin/cmax: Command IDs within the transaction

When a backend process reads a tuple, it applies visibility rules:

- Is xmin valid and visible to my transaction?

- Is xmax invalid or invisible to my transaction?

Only tuples passing these tests are returned to the client.

Transaction Processing

PostgreSQL’s transaction processing follows ACID properties:

Atomicity

Transactions are all-or-nothing. For example, a funds transfer:

BEGIN;

UPDATE accounts SET balance = balance - 100 WHERE id = 1;

UPDATE accounts SET balance = balance + 100 WHERE id = 2;

COMMIT;If any part fails, the entire transaction is rolled back.

Consistency

Transactions must leave the database in a valid state. For example, a foreign key constraint ensures consistency:

CREATE TABLE orders (

id SERIAL PRIMARY KEY,

customer_id INTEGER REFERENCES customers(id)

);

-- This fails if customer_id 999 doesn't exist in customers table

INSERT INTO orders (customer_id) VALUES (999);Isolation

PostgreSQL supports different isolation levels:

- Read Uncommitted (behaves like Read Committed in PostgreSQL)

- Read Committed (default)

- Repeatable Read

- Serializable

Each level provides stronger isolation guarantees at the cost of potential performance impact.

-- Setting isolation level for a transaction

BEGIN ISOLATION LEVEL REPEATABLE READ;

SELECT * FROM inventory WHERE product_id = 101;

-- Other operations...

COMMIT;Durability

Once a transaction is committed, its changes are permanent. This is achieved through Write-Ahead Logging:

- Changes are first written to the WAL

- Commit record is written to WAL and flushed to disk

- Client receives success confirmation

- Actual data pages are updated later

This ensures that even if the system crashes after commit but before data pages are updated, recovery can replay the WAL to restore committed changes.

Write-Ahead Logging (WAL)

WAL is central to PostgreSQL’s durability and crash recovery capabilities.

WAL Records

Each change to the database generates WAL records that describe the change in sufficient detail to recreate it if needed.

Example WAL Sequence:

1. BEGIN transaction XID 1234

2. INSERT into table 'customers' (id=42, name='Alice')

3. UPDATE table 'accounts' SET balance=900 WHERE id=1

4. COMMIT transaction XID 1234These records are written sequentially to WAL files in the pg_wal directory.

Checkpoint Process

Periodically, PostgreSQL performs a checkpoint, which:

- Flushes all dirty buffers to data files

- Records the checkpoint position in the WAL

- Creates a checkpoint record in the WAL

After a checkpoint, recovery only needs to replay WAL records after the checkpoint position.

Recovery Example

If the database crashes, recovery follows this process:

1. Start from most recent checkpoint

2. Scan forward through WAL files

3. Replay all changes from committed transactions

4. Ignore changes from uncommitted transactionsThis ensures that after recovery, the database contains exactly the changes from committed transactions, maintaining the durability guarantee.

Query Processing Pipeline

Understanding how PostgreSQL processes queries helps explain its architecture:

1. Parser

The parser converts SQL text into an internal parse tree representation, checking syntax.

SELECT name, price FROM products WHERE category = 'Electronics';becomes a tree structure representing the query’s logical components.

2. Rewriter

The rewriter applies transformation rules, including expanding views and applying row security policies.

-- View definition

CREATE VIEW active_products AS

SELECT * FROM products WHERE status = 'active';

-- Query using view

SELECT name, price FROM active_products WHERE category = 'Electronics';The rewriter transforms this to:

SELECT name, price FROM products

WHERE status = 'active' AND category = 'Electronics';3. Planner/Optimizer

The planner generates possible execution plans and estimates their cost.

For example, when executing:

SELECT customers.name, orders.order_date

FROM customers JOIN orders ON customers.id = orders.customer_id

WHERE customers.region = 'Europe';The planner might consider:

- Scan customers first, then look up matching orders

- Scan orders first, then look up customer details

- Use an index on customers.region

- Use a sequential scan if the region filter isn’t selective enough

It chooses the plan with the lowest estimated cost.

4. Executor

The executor runs the selected plan, fetching rows and performing operations.

You can observe this entire pipeline using EXPLAIN:

EXPLAIN ANALYZE

SELECT customers.name, SUM(order_items.quantity)

FROM customers

JOIN orders ON customers.id = orders.customer_id

JOIN order_items ON orders.id = order_items.order_id

WHERE customers.region = 'Europe'

GROUP BY customers.name;This reveals the exact execution plan chosen by the planner and its actual runtime performance.

Logical Replication Architecture

PostgreSQL 10+ includes built-in logical replication, which replicates data changes at the object level:

Publishers and Subscribers

The source database creates a publication:

CREATE PUBLICATION sales_pub FOR TABLE customers, orders, products;The target database creates a subscription:

CREATE SUBSCRIPTION sales_sub

CONNECTION 'host=source-db.example.com dbname=salesdb user=replication password=******'

PUBLICATION sales_pub;Logical Decoding

The publisher uses logical decoding to convert WAL records into logical change records.

WAL Record: "Update tuple at block 1234, offset 56"

Logical Record: "UPDATE orders SET status='shipped' WHERE id=42"These logical changes are sent to subscribers, which apply them to their copies of the tables.

PostgreSQL Architecture Diagram

Below is a comprehensive architectural diagram showing how all these components interact:

┌─────────────────────────────────────────────────────────────────────┐

│ PostgreSQL Instance │

│ │

│ ┌──────────────┐ ┌───────────────────────────────────────────┐ │

│ │ │ │ Background Processes │ │

│ │ Postmaster │ │ ┌─────────────┐ ┌────────────┐ ┌────────┐ │ │

│ │ (postgres) │────>│ │ Checkpointer│ │Autovacuum │ │ WAL │ │ │

│ │ │ │ │ │ │ Launcher │ │ Writer │ │ │

│ └──────────────┘ │ └─────────────┘ └────────────┘ └────────┘ │ │

│ │ │ ┌─────────────┐ ┌────────────┐ ┌────────┐ │ │

│ │ │ │ Background │ │ WAL Sender │ │ Stats │ │ │

│ │ │ │ Writer │ │ │ │Collector│ │ │

│ │ │ └─────────────┘ └────────────┘ └────────┘ │ │

│ │ └───────────────────────────────────────────┘ │

│ │ │

│ │ ┌─────────────────────────────────────┐ │

│ │ │ Shared Memory │ │

│ │ │ ┌─────────────┐ ┌─────────────────┐ │ │

│ │ │ │ Shared │ │ WAL Buffers │ │ │

│ ▼ │ │ Buffers │ │ │ │ │

│ ┌──────────────┐ │ └─────────────┘ └─────────────────┘ │ │

│ │ Client │ │ ┌─────────────┐ ┌─────────────────┐ │ │

│ │ Connections │ │ │ Clog │ │ Lock Tables │ │ │

│ └──────────────┘ │ │(Commit Log) │ │ │ │ │

│ │ │ └─────────────┘ └─────────────────┘ │ │

│ ▼ └─────────────────────────────────────┘ │

│ ┌──────────────┐ │

│ │ Backend │ ┌─────────────────────────────────────┐ │

│ │ Processes │ │ Storage │ │

│ │ ┌──────────┐ │ │ ┌─────────────┐ ┌─────────────────┐ │ │

│ │ │ Backend 1│<├──────┼─┤ Data Files │ │ WAL Files │ │ │

│ │ │(Parser, │ │ │ │(Tables, │ │(Transaction Log)│ │ │

│ │ │ Planner, │ │ │ │ Indexes) │ │ │ │ │

│ │ │ Executor)│ │ │ └─────────────┘ └─────────────────┘ │ │

│ │ └──────────┘ │ │ ┌─────────────┐ ┌─────────────────┐ │ │

│ │ ┌──────────┐ │ │ │Configuration│ │ System │ │ │

│ │ │ Backend 2│ │ │ │ Files │ │ Catalogs │ │ │

│ │ └──────────┘ │ │ └─────────────┘ └─────────────────┘ │ │

│ │ ... │ └─────────────────────────────────────┘ │

│ └──────────────┘ │

│ │

└─────────────────────────────────────────────────────────────────────┘Real-World Architecture Example

Let’s examine a complete example showing PostgreSQL’s architecture in action:

Scenario: Order Processing System

- Client Connection:

A client application connects to PostgreSQL to process a new order.

psql -h db.example.com -U order_processor -d sales- Backend Process Creation:

The postmaster creates a dedicated backend process for this connection. - Transaction Processing:

BEGIN;

-- Check inventory

SELECT qty_available FROM inventory WHERE product_id = 101;

-- Result: 25 units available

-- Create order

INSERT INTO orders (customer_id, order_date)

VALUES (42, CURRENT_DATE) RETURNING id;

-- Result: 1001 (new order ID)

-- Add order items

INSERT INTO order_items (order_id, product_id, quantity, price)

VALUES (1001, 101, 5, 29.99);

-- Update inventory

UPDATE inventory SET qty_available = qty_available - 5

WHERE product_id = 101;

COMMIT;- What Happens Internally: a. Transaction Initialization:

- Backend assigns transaction ID (XID), e.g., 9876Creates a snapshot of the database state

- Parser converts SQL to internal representationPlanner determines most efficient execution planExecutor fetches data using shared buffers

- New tuples created with xmin = 9876WAL records written for each changeIndex entries updated

- WAL commit record created

- WAL flushed to disk (durability)

- Transaction status set to committed in pg_xact

- Client notified of successful commit

- Concurrent Access:

While this transaction is running, other clients can still:

- Read consistent inventory data (MVCC)

- Process orders for different products

- Run reports without blocking

- Background Processes:

- WAL Writer ensures WAL data is persisted

- Background Writer gradually writes modified buffers

- Checkpointer eventually creates a consistency point

- Autovacuum later cleans up old row versions

Conclusion: Why PostgreSQL’s Architecture Matters

PostgreSQL’s sophisticated architecture delivers several key benefits:

- Reliability: The WAL system ensures no committed transactions are lost, even during crashes.

- Concurrency: MVCC allows high levels of concurrent access without excessive locking.

- Scalability: The process-per-connection model, combined with connection pooling, scales to thousands of concurrent users.

- Extensibility: The modular design allows PostgreSQL to add features without disrupting its core architecture.

- Consistency: ACID compliance ensures data integrity even in complex scenarios.

Understanding PostgreSQL’s architecture helps you:

- Configure the database optimally for your workloads

- Troubleshoot performance issues effectively

- Plan for scaling and high availability

- Appreciate the design decisions that make PostgreSQL both powerful and reliable

By mastering these architectural concepts, you’ll be well-equipped to leverage PostgreSQL’s full potential in your applications.