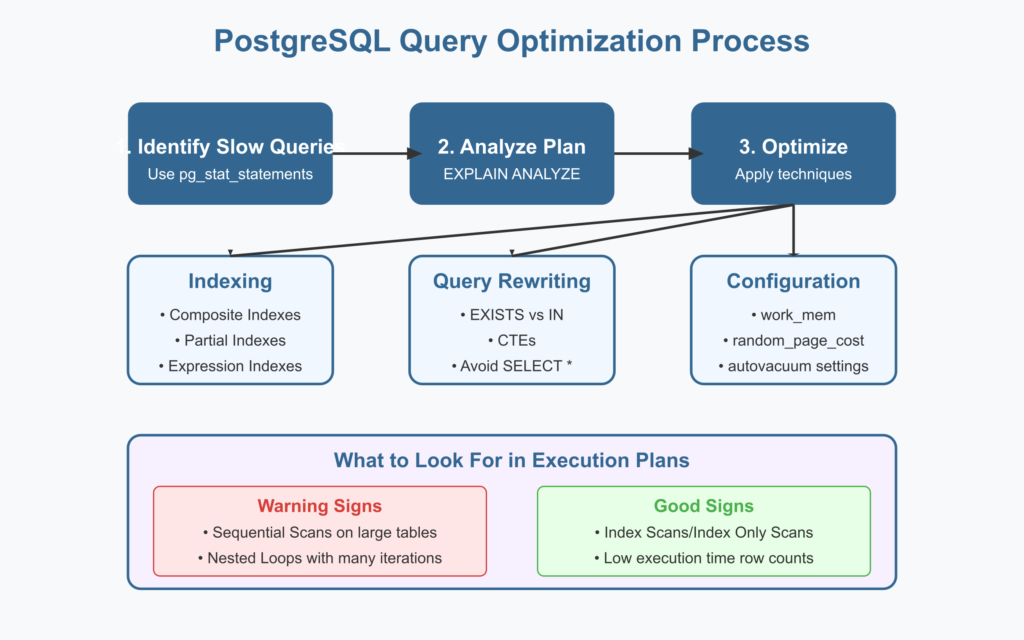

As your PostgreSQL databases grow and your applications scale, you’ll inevitably face performance challenges. That’s where query optimization comes in – it’s the art of making your database queries run faster and use fewer resources. In this post, I’ll share some advanced optimization techniques that I’ve learned as a database administrator, explained in simple terms that even beginners can understand.

What You’ll Learn

- Why query optimization matters

- How to read and interpret PostgreSQL’s execution plans

- Essential indexing strategies beyond the basics

- Query rewriting techniques for better performance

- Common optimization pitfalls and how to avoid them

- Advanced configuration settings that impact query performance

Why Query Optimization Matters

Imagine you’re looking for a specific book in a library. Without organization, you’d have to check every single book until you found the right one. But with a catalog system and proper shelving, you can go directly to the right section and find your book quickly.

Database query optimization works the same way. Without it, PostgreSQL might need to scan through millions of rows to find what you’re looking for. With proper optimization, it can go straight to the data in milliseconds.

The benefits of query optimization include:

- Faster response times for your applications

- Reduced server resource usage (CPU, memory, disk I/O)

- Better scalability as data and user volumes grow

- Lower infrastructure costs

- Happier users who don’t have to wait for slow queries

Understanding Execution Plans with EXPLAIN ANALYZE

Before optimizing anything, you need to understand how PostgreSQL executes your queries. The EXPLAIN ANALYZE command is your best friend here.

Let’s look at a simple example. Imagine we have a table of orders:

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

customer_id INTEGER,

order_date DATE,

total_amount DECIMAL(10,2),

status VARCHAR(20)

);

-- Insert some sample data

INSERT INTO orders (customer_id, order_date, total_amount, status)

SELECT

floor(random() * 1000)::integer,

current_date - (random() * 365)::integer,

(random() * 1000)::decimal(10,2),

(ARRAY['pending', 'completed', 'shipped', 'cancelled'])[floor(random() * 4 + 1)]

FROM generate_series(1, 100000);

-- Create an index on customer_id

CREATE INDEX idx_orders_customer_id ON orders(customer_id);

Now, let’s run a query with EXPLAIN ANALYZE:

EXPLAIN ANALYZE

SELECT * FROM orders

WHERE customer_id = 42

AND order_date > '2023-01-01';

This might produce output like:

QUERY PLAN

----------------------------------------------------------------------

Index Scan using idx_orders_customer_id on orders

(cost=0.29..8.31 rows=5 width=23) (actual time=0.019..0.023 rows=3 loops=1)

Index Cond: (customer_id = 42)

Filter: (order_date > '2023-01-01'::date)

Rows Removed by Filter: 2

Planning Time: 0.096 ms

Execution Time: 0.042 ms

Let’s break this down:

- Index Scan: PostgreSQL is using our index on customer_id

- Index Cond: The condition applied using the index (customer_id = 42)

- Filter: An additional filter applied after the index lookup (order_date > ‘2023-01-01’)

- Rows Removed by Filter: PostgreSQL found 5 rows with customer_id = 42, but 2 didn’t meet the date condition

- Execution Time: The query took 0.042 milliseconds to execute

When analyzing execution plans, look for these warning signs:

- Sequential Scans on large tables

- High costs in the cost estimates

- Many rows being processed but few being returned

- Nested Loops with many iterations

- Hash joins with large tables and high memory usage

Essential Indexing Strategies

Indexes are the most powerful optimization tool in your toolkit. Here are some advanced indexing strategies:

1. Composite Indexes for Multi-Column Queries

When queries frequently filter on multiple columns together, create a composite index:

CREATE INDEX idx_orders_customer_date ON orders(customer_id, order_date);

Now our previous query can use this index more efficiently:

QUERY PLAN

----------------------------------------------------------------------

Index Scan using idx_orders_customer_date on orders

(cost=0.29..8.31 rows=5 width=23) (actual time=0.015..0.018 rows=3 loops=1)

Index Cond: (customer_id = 42 AND order_date > '2023-01-01'::date)

Planning Time: 0.088 ms

Execution Time: 0.035 ms

Notice how both conditions are now applied as Index Cond, eliminating the Filter step.

2. Partial Indexes for Specific Subsets

If you frequently query a specific subset of data, partial indexes can be very efficient:

CREATE INDEX idx_orders_pending ON orders(order_date)

WHERE status = 'pending';

This index only includes pending orders, making it much smaller and more efficient for queries like:

SELECT * FROM orders

WHERE status = 'pending'

AND order_date > '2023-01-01';

3. Expression Indexes for Function Queries

If you often query using functions or expressions, index the expression itself:

CREATE INDEX idx_orders_lower_status ON orders(LOWER(status));

This index will be used for case-insensitive searches:

SELECT * FROM orders

WHERE LOWER(status) = 'completed';

4. INCLUDE Columns for Index-Only Scans

To avoid table lookups after finding a match in an index, you can include additional columns:

CREATE INDEX idx_orders_customer_include ON orders(customer_id)

INCLUDE (order_date, status);

This allows PostgreSQL to answer certain queries directly from the index without accessing the table.

Query Rewriting Techniques

Sometimes, changing how you write your queries can dramatically improve performance:

1. Prefer EXISTS over IN for Subqueries

Compare these two queries:

-- Using IN (potentially slower)

SELECT * FROM customers

WHERE customer_id IN (

SELECT customer_id FROM orders

WHERE total_amount > 1000

);

-- Using EXISTS (often faster)

SELECT * FROM customers c

WHERE EXISTS (

SELECT 1 FROM orders o

WHERE o.customer_id = c.customer_id

AND o.total_amount > 1000

);

The EXISTS version often performs better because it can stop scanning orders as soon as it finds a match for each customer.

2. Use Common Table Expressions (CTEs) for Readability and Optimization

CTEs can make complex queries more readable and sometimes more efficient:

WITH high_value_orders AS (

SELECT customer_id, SUM(total_amount) as total_spent

FROM orders

GROUP BY customer_id

HAVING SUM(total_amount) > 10000

)

SELECT c.name, hvo.total_spent

FROM customers c

JOIN high_value_orders hvo ON c.customer_id = hvo.customer_id

ORDER BY hvo.total_spent DESC;

3. Avoid SELECT * When Possible

Retrieve only the columns you need:

-- Instead of this:

SELECT * FROM orders WHERE customer_id = 42;

-- Do this:

SELECT order_id, order_date, status FROM orders WHERE customer_id = 42;

This reduces I/O and memory usage, especially for tables with many columns or large text fields.

4. Use LIMIT for Pagination

When displaying results to users, implement pagination:

SELECT order_id, order_date, total_amount

FROM orders

ORDER BY order_date DESC

LIMIT 20 OFFSET 40; -- Page 3 with 20 items per page

For more efficient pagination with large offsets, consider keyset pagination:

SELECT order_id, order_date, total_amount

FROM orders

WHERE order_date < '2023-05-10' -- Last date from previous page

ORDER BY order_date DESC

LIMIT 20;

Common Optimization Pitfalls

Watch out for these common mistakes:

1. Over-Indexing

Creating too many indexes can slow down write operations and waste disk space. Focus on indexes that support your most frequent or critical queries.

2. Using Functions on Indexed Columns

This prevents index usage:

-- Bad (won't use index on order_date)

SELECT * FROM orders

WHERE EXTRACT(YEAR FROM order_date) = 2023;

-- Better (can use index on order_date)

SELECT * FROM orders

WHERE order_date BETWEEN '2023-01-01' AND '2023-12-31';

3. Implicit Type Conversions

Mixing data types forces PostgreSQL to perform conversions:

-- Bad (forces conversion from text to integer)

SELECT * FROM orders WHERE customer_id = '42';

-- Good (uses native integer comparison)

SELECT * FROM orders WHERE customer_id = 42;

4. Not Analyzing Tables Regularly

As tables grow, statistics become outdated:

-- Run this regularly on important tables

ANALYZE orders;

Advanced Configuration Settings

Finally, here are some PostgreSQL configuration parameters that can impact query performance:

1. work_mem

This setting determines how much memory PostgreSQL can use for sorting and hash operations:

-- Check current setting

SHOW work_mem;

-- Set for current session

SET work_mem = '64MB';

Increase this for complex queries with many sorts or hash joins, but be careful not to set it too high as each operation gets its own allocation.

2. effective_cache_size

This tells the query planner how much memory is available for disk caching:

SHOW effective_cache_size;

Set this to about 75% of your total system memory for dedicated database servers.

3. random_page_cost

This parameter influences whether PostgreSQL prefers index scans or sequential scans:

-- For SSDs, consider lowering this

SET random_page_cost = 1.1;

The default is 4.0, but for fast SSDs, values between 1.1 and 2.0 often work better.

4. Autovacuum Settings

Proper vacuuming keeps tables optimized:

SHOW autovacuum_vacuum_threshold;

SHOW autovacuum_vacuum_scale_factor;

For tables with frequent updates, consider table-specific settings:

ALTER TABLE orders SET (

autovacuum_vacuum_threshold = 1000,

autovacuum_vacuum_scale_factor = 0.05

);

Conclusion

Query optimization is both an art and a science. Start by understanding your workload and identifying the slowest queries using tools like pg_stat_statements. Use EXPLAIN ANALYZE to understand how these queries are executed, then apply the techniques we’ve discussed to improve their performance.

Remember that optimization is an iterative process. Make one change at a time, measure its impact, and then decide whether to keep it or try something else. With practice, you’ll develop an intuition for what works best in different situations.

Happy optimizing!

Hi